Team Size: 13

Timeframe: 3 months

Sprint Timeframe: 6 weeks

Role: Interactive Editor (Lead)

Key Contributions: Challenge 1, Master Logic

Learning Prompt: Identifies Sight Words

Date Sprint Completed: April 2021

“Robot Boogie” is the fourth experience in Noggin’s second season of Missions. In it, the player helps Maggie surprise Mango with a bopping robot to dance with. You complete a series of challenges, building the robot and then teaching it to dance, all while testing your comprehension of sight words.

My role on the team spanned a 6-week sprint starting in March 2021, and this was the first project I contributed to as lead interactive editor. As lead, you are tasked with managing the master logic in addition to your own challenge, as well as “owning” the project (i.e. keeping the core project file on your computer, running exports, and uploading to Box and GitHub). Much of the master logic is prepared and reused as a template from older missions, but you still have to change out the programmatic aesthetics in your keyframes. That includes the menu (see below), which stays nested in the same keyframe but evolves over the course of the experience based on how many challenges the player has completed.

This means managing the tracking variables that record how many challenges have been completed, as well as the seek logic that determines where in the source video the menu seeks to upon completion.
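As a rough illustration of that bookkeeping, here is a minimal Python sketch. It is not the actual project logic: the names `MissionState` and `MENU_SEEK_SECONDS`, the assumption of three challenges, and every timestamp are hypothetical, chosen only to show how a completion count can drive where the menu seeks to.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical seek targets (seconds into the source video) for each menu
# state, keyed by how many challenges are complete. Values are illustrative.
MENU_SEEK_SECONDS = {0: 12.0, 1: 95.5, 2: 180.0, 3: 262.25}


@dataclass
class MissionState:
    """Tracks which challenges the player has finished."""

    completed: Set[int] = field(default_factory=set)

    def complete_challenge(self, challenge_id: int) -> float:
        """Record a completed challenge and return the new menu seek time."""
        # A set means replaying a finished challenge does not advance the menu.
        self.completed.add(challenge_id)
        return self.menu_seek_time()

    def menu_seek_time(self) -> float:
        """Look up the menu's seek target from the completion count."""
        return MENU_SEEK_SECONDS[len(self.completed)]
```

Keying the lookup on the count rather than on which challenge was finished keeps the menu in one place while its appearance changes with progress.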

To balance the workload, the lead interactive editor is typically given the least complicated challenge, which is usually Challenge 1 (C1) of a Mission. This also lets you complete your challenge fairly independently and test it along with the master logic while waiting for the other challenges’ templates and updates from the other editors.

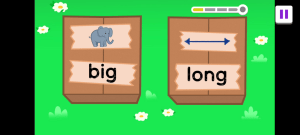

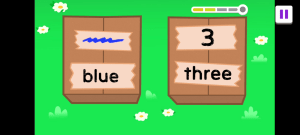

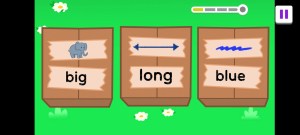

C1 in “Robot Boogie” uses very straightforward touch input logic spread over four interactions. Each interaction has a correct answer (CA) and a wrong answer (WA), and selecting the wrong answer auto-completes the interaction. The only tricky part was matching the position of the programmatic box with the “open Box” animation created by the team’s animators.
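The touch logic described above can be sketched in Python as follows. This is an illustration only, not the project's code: `Box`, `Interaction`, `run_challenge`, the outcome strings, and the coordinates in the usage note are all hypothetical names and values.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned hit area; (x, y) is the top-left corner."""

    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


@dataclass(frozen=True)
class Interaction:
    correct: Box  # CA hit area
    wrong: Box    # WA hit area

    def handle_touch(self, px: float, py: float) -> str:
        if self.correct.contains(px, py):
            return "correct"
        if self.wrong.contains(px, py):
            # A wrong answer still finishes the interaction.
            return "auto_complete"
        return "ignored"  # touch landed outside both boxes


def run_challenge(interactions: Sequence[Interaction],
                  touches: Sequence[Tuple[float, float]]) -> List[str]:
    """Feed touches through the interactions, advancing on any answer."""
    results: List[str] = []
    index = 0
    for px, py in touches:
        if index >= len(interactions):
            break  # all interactions are done
        outcome = interactions[index].handle_touch(px, py)
        if outcome != "ignored":
            results.append(outcome)
            index += 1
    return results
```

For example, with a correct box at `Box(0, 0, 100, 100)` and a wrong box at `Box(200, 0, 100, 100)`, a touch at `(150, 50)` is ignored, while a touch inside either box moves the challenge on to the next interaction.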

As is the case with most Noggin missions, my challenge has three separate “levels” (a total of 12 interactions). A player’s assigned level of difficulty is determined by their performance in experiences across the Noggin app.

My playthrough of this Mission covers Level 1 (L1). Levels 2 and 3 (L2, L3) of the challenge use much the same logic as the first level, just with different aesthetics and one more wrong-answer box per level. This made the position randomization slightly trickier to program at higher levels, but overall it remained straightforward.
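One way to picture the position randomization is the Python sketch below. It assumes, from the description above, that level n shows one correct-answer box plus n wrong-answer boxes, and that boxes are placed on a fixed set of on-screen slots. The function name `assign_positions`, the labels, and the slot coordinates are hypothetical, not taken from the project.

```python
import random
from typing import Dict, Optional, Sequence, Tuple

Slot = Tuple[int, int]  # hypothetical (x, y) screen position for a box


def assign_positions(level: int, slots: Sequence[Slot],
                     rng: Optional[random.Random] = None) -> Dict[str, Slot]:
    """Place one CA box and `level` WA boxes on distinct random slots."""
    rng = rng or random.Random()
    answers = ["CA"] + [f"WA{i}" for i in range(1, level + 1)]
    if len(slots) < len(answers):
        raise ValueError("not enough slots for this level")
    # sample() draws without replacement, so no two boxes share a slot.
    chosen = rng.sample(list(slots), len(answers))
    return dict(zip(answers, chosen))
```

Passing a seeded `random.Random` makes a layout reproducible for testing, while the default gives a fresh arrangement on each call.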

Look at Mango and the Robot go! Mission accomplished!